The 10 Best MCP Servers for Cloud‑Native Engineers in 2025

- Gideon Aleonogwe

- Sep 17, 2025

- 18 min read

Updated: Oct 31, 2025

Cloud-native engineering (and platform engineering) is evolving quickly, and AI is at the centre of that shift.

In the past, when a developer needed something as simple as a new database for testing, the process was slow. They had to raise a ticket, wait for approvals, and depend on manual provisioning, which sometimes took days before they could even start working.

Now picture the same request in 2025.

Instead of waiting, an AI assistant provisions the database instantly, runs compliance checks, and makes it available right away. At least that’s the goal!

MCP servers and platform orchestration

This new AI-based workflow will be powered by a Model Context Protocol (MCP) server, which is a secure bridge that allows AI models to interact with your tools and infrastructure. With MCP, AI stops being just a demo and becomes a reliable part of daily engineering workflows.

And all of this isn’t just theory; we’re already seeing platform teams experiment with MCPs, as shown in this recent Syntasso webinar, “No more YAML? Natural language deployments with Kratix & Claude”.

Providing the features of Kratix and SKE via an MCP server can ensure that your platform is built with speed, safety, efficiency and scalability in mind. In other words, the value of platform orchestration increases as you come to rely on platform services and tools being consumed via MCP servers.

Now, for cloud-native teams, this impact is vast. We’re talking faster delivery, fewer bottlenecks, and safer automation. With dozens of MCP servers emerging across CI/CD, observability, incident response, compliance, and platform self-service, the challenge lies in identifying which ones are production-ready.

This article aims to cut through the noise, explain MCP servers and their role in cloud-native engineering, and highlight the MCP servers that are worth your attention in 2025.

Let’s rewind: What are MCP servers?

Model Context Protocol, or MCP for short, is a standard that enables AI models to communicate with your systems safely and with context. You can think of an MCP server as a translator: it takes your developer tools, data, and workflows and exposes them as clear actions an AI can call.

On the other side, the MCP client is the AI agent that decides when to use those actions. Put simply, the server provides the capabilities, and the client makes use of them. For example, the server might expose “deploy an app,” “run a compliance check,” or “fetch logs,” and the client can call these when needed.

Anthropic, which designed MCP, created it as a two-way bridge, with a standard spec to make sure the communication is structured, secure, and reliable. The client first discovers what a server can do (its tool names, input and output schemas, and required permissions), then sends a typed request. The server validates the request against the schema, checks auth, executes the action with its own credentials, and returns a structured result plus an audit record.

For example, if you ask to “fetch logs for checkout in prod”, the client calls the Kubernetes server’s logs tool; the server verifies the namespace and selectors, runs the query, and replies with the logs and a status you can trust.

That is what “structured, secure, and reliable” looks like in practice.
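To make the discover, validate, execute, and audit steps concrete, here is a minimal in-process sketch in Python. It borrows the `tools/list` and `tools/call` method names and the `inputSchema` field from the MCP specification, but everything else is an assumption made for illustration: the `fetch_logs` tool, the namespace allow-list, the hand-rolled validator (which covers only a tiny subset of JSON Schema), and the in-memory audit log, which is a server-side convention here rather than part of the protocol.

```python
import json
from datetime import datetime, timezone

# Hypothetical tool catalogue: one read-only tool with a JSON Schema-style input.
TOOLS = {
    "fetch_logs": {
        "description": "Fetch recent log lines for a workload",
        "inputSchema": {
            "type": "object",
            "properties": {"namespace": {"type": "string"}, "app": {"type": "string"}},
            "required": ["namespace", "app"],
        },
    }
}
ALLOWED_NAMESPACES = {"prod", "staging"}  # assumption: scope enforced by the server
AUDIT_LOG = []  # assumption: audit records kept server-side, in memory


def validate(schema, args):
    """Check required keys, unknown keys, and string types only (not full JSON Schema)."""
    missing = [key for key in schema["required"] if key not in args]
    if missing:
        return f"missing required arguments: {missing}"
    for key, value in args.items():
        if key not in schema["properties"]:
            return f"unknown argument: {key}"
        if schema["properties"][key]["type"] == "string" and not isinstance(value, str):
            return f"argument {key} must be a string"
    return None


def handle(request, actor):
    """Handle one JSON-RPC request dict and return a JSON-RPC response dict."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [{"name": name, **spec} for name, spec in TOOLS.items()]}
    elif method == "tools/call":
        name, args = params["name"], params.get("arguments", {})
        error = "unknown tool" if name not in TOOLS else validate(TOOLS[name]["inputSchema"], args)
        if error is None and args["namespace"] not in ALLOWED_NAMESPACES:
            error = "namespace not permitted"
        # A real server would query the cluster here; the sketch returns a placeholder.
        text = error or f"(log lines for {args['app']} in {args['namespace']})"
        AUDIT_LOG.append({"actor": actor, "tool": name, "ok": error is None,
                          "at": datetime.now(timezone.utc).isoformat()})
        result = {"content": [{"type": "text", "text": text}], "isError": error is not None}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"}, actor="alice")
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "fetch_logs",
                          "arguments": {"namespace": "prod", "app": "checkout"}}}, actor="alice")
print(json.dumps(call["result"], indent=2))
```

The point of the sketch is the ordering: nothing runs until the tool name, the arguments, and the scope have all been checked, and every call leaves a record whether it succeeded or not.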

How it fits into daily workflows

In practice, MCPs turn tools into small composable verbs your agent can call safely.

For example, let’s say you ask your agent to “open a PagerDuty incident, link the failing service, and post the summary to Slack”.

One server exposes incident actions. Another exposes Slack posting. Then, the client composes both calls and returns a single result.

You could swap the tools, and the prompt stays the same, or even replace PagerDuty with Jira, GitHub Issues, or a custom SRE runbook server, and the pattern still holds.
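The swap described above can be sketched in a few lines of Python. The three tool functions are hypothetical stand-ins for tools exposed by separate MCP servers (they return canned data and call no real API); what matters is that the composing function depends only on the tool names and argument shapes, not on which vendor sits behind them.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for tools exposed by separate MCP servers.
def pagerduty_open_incident(title: str, service: str) -> dict:
    return {"tracker": "pagerduty", "id": "INC-1", "title": title, "service": service}


def jira_open_ticket(title: str, service: str) -> dict:
    return {"tracker": "jira", "id": "OPS-1", "title": title, "service": service}


def slack_post(channel: str, text: str) -> dict:
    return {"channel": channel, "text": text, "posted": True}


def open_and_announce(tools: Dict[str, Callable[..., dict]],
                      title: str, service: str, channel: str) -> dict:
    """Compose two tool calls: open an incident, then post its summary."""
    incident = tools["open_incident"](title=title, service=service)
    summary = f"[{incident['tracker']}] {incident['id']}: {title} ({service})"
    post = tools["post_message"](channel=channel, text=summary)
    return {"incident": incident, "post": post}


tools = {"open_incident": pagerduty_open_incident, "post_message": slack_post}
first = open_and_announce(tools, "Error rate above threshold", "payments", "#incidents-payments")

# Swap the incident backend; the request and the composing code stay the same.
tools["open_incident"] = jira_open_ticket
second = open_and_announce(tools, "Error rate above threshold", "payments", "#incidents-payments")
print(first["post"]["text"])
print(second["post"]["text"])
```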

For cloud-native teams, MCP servers fit into your existing stack, running as containerised services near your data and controls. You version and deploy them like any other service, and expose only the actions your platform permits.

Why MCPs matter to cloud-native engineers

Large language models (LLMs) are powerful, but without schemas to validate and constrain their calls, you get unpredictable results. MCPs address this by providing your agents with safe, structured access to tools through MCP servers.

You expose only the actions you approve of, and each action will get a schema, auth, and guardrail. You can then use them in the following ways and environments:

Kubernetes clusters: MCPs turn cluster operations into explicit capabilities. With them, you can scale a deployment, trigger a rollout, fetch logs for a failing pod, etc. Additionally, you get to keep RBAC, namespaces, and policies exactly where they belong.

The model cannot run arbitrary kubectl on a whim; it can only call the server’s allowed functions, with dry-run, change approval, and rollback built in.

DevOps automation: In automation setups, DevOps engineers use MCPs as the switchboard. The agent can open an incident, attach runbook steps, page on-call, create a Jira ticket, and post to Slack, all through declared tools.

All of this works without the webhooks and secrets that you forget to rotate. You get AI-driven cloud-native workflows that are testable, permissioned, and easy to version alongside the rest of your platform.

Observability: MCPs enable the model to ask specific questions about your telemetry data. You can query Prometheus, retrieve traces, and filter logs for a single request path, as well as compare SLO error budgets across different services. From there, you can take action.

If a canary deployment crosses a predetermined threshold, the same workflow can pause or roll back a release through the deployment server. Dashboards stop being destinations you visit and become signals that drive AI workflows through MCP servers.
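As a rough illustration of that canary gate, the Python sketch below turns two error rates into one of three actions. The function name, the threshold values, and the choice to compare absolute increases over the baseline are all assumptions for the example; a real workflow would read the rates from the observability server and send the resulting action to the deployment server.

```python
def canary_decision(canary_error_rate: float, baseline_error_rate: float,
                    pause_at: float = 0.01, rollback_at: float = 0.05) -> str:
    """Return 'continue', 'pause', or 'rollback'.

    Thresholds are absolute increases in error rate over the baseline
    (illustrative values, not recommendations).
    """
    delta = canary_error_rate - baseline_error_rate
    if delta >= rollback_at:
        return "rollback"
    if delta >= pause_at:
        return "pause"
    return "continue"


print(canary_decision(0.002, 0.001))  # small increase
print(canary_decision(0.03, 0.005))   # moderate increase
print(canary_decision(0.08, 0.005))   # large increase
```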

The 10 Best MCP servers for 2025

Now, we’ve talked about what MCPs are and why they’re helpful. In this section, we will give you a curated shortlist of ten (10) MCP servers that prioritise cloud-native solutions.

For each one, we cover what it does, why it matters, and its current status, so you can judge where it fits in your own AI-driven developer workflows:

Kubernetes MCP server

Argo CD MCP server (Akuity)

Port MCP server

Prometheus MCP server

Grafana MCP server

PagerDuty MCP server

GitHub MCP server

Slack MCP server

Terraform MCP server (HashiCorp)

AWS and Azure MCP servers

Let’s dive into them in more detail!

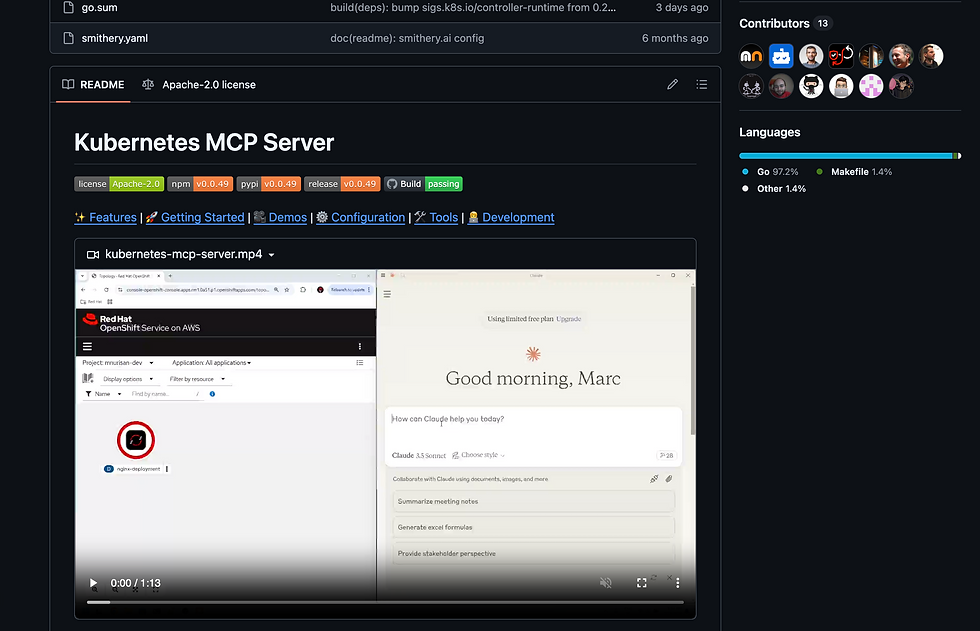

1. Kubernetes MCP server

The Kubernetes MCP server exposes a safe, schema-defined slice of the Kubernetes and OpenShift APIs to your agent. You get least-privilege operations without handing over a kubeconfig or letting the model run arbitrary kubectl. You can use it to triage incidents, surface failing workloads, and execute day-two operations while your RBAC, namespaces, and admission policies remain in control.

Key features

Namespaced, RBAC-aware verbs: You can run safe actions, such as listing or describing resources, fetching pod logs, scaling Deployments, restarting rollouts, and even executing Helm actions, all within the namespaces and roles you’re authorised to access.

Call only what you permit: Read-only and allow-list modes make it easy to restrict exactly which verbs and resources are callable.

Schema validation: Inputs are checked against JSON Schema with server-side validation, and a dry run shows you what will change before anything is applied.

Full audit trail: Every call is logged with actor, namespace, verb, and diff, giving you a traceable record for your SIEM.

Policy and safety hooks: OPA or Gatekeeper can be integrated to ensure only compliant changes get through.

Guardrails for risky actions: exec is wrapped behind explicit tools, meaning it can’t be executed without prior authorisation. Additionally, timeouts, non-TTY defaults, and rate limits are in place to prevent misuse.

Example scenario

You’ve updated a ConfigMap and need workloads to pick it up cleanly. You could ask: “Which Deployments in prod reference the checkout-config ConfigMap?”, and follow with: “Roll out a safe restart for those Deployments and tell me when all pods are Ready.”

The client routes the requests to the Kubernetes server, which resolves the references, performs a dry run, applies the restart under your RBAC, and streams the readiness status back with links that you can inspect.
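The two steps in this scenario can be sketched in plain Python over Deployment manifests held as dictionaries. This is not the server's implementation: the helper names are hypothetical, the reference check covers only volumes, `envFrom`, and `env` (not projected volumes, for example), and the patch simply mirrors the annotation that `kubectl rollout restart` sets on the pod template.

```python
from datetime import datetime, timezone


def references_configmap(deployment: dict, name: str) -> bool:
    """True if the Deployment's pod template mentions the ConfigMap (non-exhaustive)."""
    pod_spec = deployment["spec"]["template"]["spec"]
    for volume in pod_spec.get("volumes", []):
        if volume.get("configMap", {}).get("name") == name:
            return True
    containers = pod_spec.get("containers", []) + pod_spec.get("initContainers", [])
    for container in containers:
        for source in container.get("envFrom", []):
            if source.get("configMapRef", {}).get("name") == name:
                return True
        for env in container.get("env", []):
            if env.get("valueFrom", {}).get("configMapKeyRef", {}).get("name") == name:
                return True
    return False


def restart_patch(now=None) -> dict:
    """Patch body that triggers a rolling restart by changing a pod-template annotation."""
    stamp = (now or datetime.now(timezone.utc)).isoformat()
    return {"spec": {"template": {"metadata": {"annotations": {
        "kubectl.kubernetes.io/restartedAt": stamp}}}}}


# Illustrative manifests, trimmed to the fields the helpers read.
checkout = {"metadata": {"name": "checkout"}, "spec": {"template": {"spec": {
    "containers": [{"name": "app", "envFrom": [{"configMapRef": {"name": "checkout-config"}}]}]}}}}
search = {"metadata": {"name": "search"}, "spec": {"template": {"spec": {
    "containers": [{"name": "app"}]}}}}

to_restart = [d["metadata"]["name"] for d in (checkout, search)
              if references_configmap(d, "checkout-config")]
print(to_restart, restart_patch())
```

Because the patch only touches an annotation, the restart goes through the Deployment's normal rolling-update strategy, which is what keeps it "safe" in the sense used above.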

2. Argo CD MCP server (Akuity)

The Argo CD MCP server, maintained by Akuity, provides your agent with safe, schema-defined access to Argo CD, allowing you to query app state and, when permitted, execute GitOps actions without relinquishing cluster-wide control. You keep declarative workflows in Git, Argo stays the source of truth, and the model only calls approved verbs with scoped credentials.

You can use it to list applications, inspect resource trees, compare “desired” versus “live” state, and perform targeted syncs or rollbacks behind policy checks. The server supports standard transports and fits neatly alongside your existing Argo deployment, and Akuity’s stewardship means clear docs and a visible roadmap.

Key features

Read/write verbs: You can perform core actions, such as listing apps, checking health and sync status, fetching resource trees, refreshing, syncing, or rolling back, where permitted.

Drift awareness: You’ll be able to diff the desired state against the live state, so you can see why an app is out of sync before taking action.

Least-privilege access: You can use tokens and allow-lists to restrict which apps and actions are callable, and you can even switch to read-only mode if you only want safe visibility.

Dry-run checks: You can preview changes in a “sync preview” before they’re applied, so you know exactly what will change.

Audit and traceability: Every call you make is logged with the actor, app, and action, and you can trace it back to the Argo UI whenever you need visibility.

Policy hooks: You can gate write actions on conditions such as change windows, approvals, or SLO health from your observability stack.

Example scenario

Let’s say you have a service that looks stale after a merge. You could write a prompt like: “List out-of-sync Argo CD apps in production,” and follow with: “Run a targeted sync for the payments app and report its health.”

The client routes both requests to the Argo CD server, which validates permissions, previews the change, performs the sync if allowed, and returns a short status with a link to the app in Argo.
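To show what “desired versus live” means in practice, here is a deliberately naive Python sketch that walks two manifests and reports the fields that differ. It is an illustration only: Argo CD's own diffing normalises resources and ignores server-populated fields, which this helper (a hypothetical `diff_state`) does not attempt.

```python
def diff_state(desired, live, path=""):
    """Return (path, desired_value, live_value) tuples for every differing field."""
    changes = []
    if isinstance(desired, dict) and isinstance(live, dict):
        for key in sorted(set(desired) | set(live)):
            child = f"{path}.{key}" if path else key
            if key not in live:
                changes.append((child, desired[key], None))
            elif key not in desired:
                changes.append((child, None, live[key]))
            else:
                changes.extend(diff_state(desired[key], live[key], child))
    elif desired != live:
        changes.append((path, desired, live))
    return changes


# Illustrative manifests: Git says three replicas, the cluster is running two.
desired = {"spec": {"replicas": 3, "image": "payments:v2"}}
live = {"spec": {"replicas": 2, "image": "payments:v2"}}
drift = diff_state(desired, live)
print(drift)
```

A report like this is what lets an agent explain why an app is out of sync before it proposes a sync.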

Status: Open source, actively maintained.

3. Port MCP server

The Port Remote MCP server allows you to interact with your entire SDLC from wherever you desire: an AI agent, an AI chat app like Claude, or straight from your IDE. This means you can bring your developer portal’s data and actions into the conversational interfaces you already use to reduce context-switching, increase efficiency, improve DevEx, and make better data-informed decisions.

Use it to query your software catalog, analyze service health, manage resources, and streamline development workflows. Ask questions like, “Who owns this service?” and “Where do bottlenecks occur in my deployment pipeline?” and receive domain-integrated answers with actionable recommendations. This helps you stay focused, and thanks to Syntasso Kratix Enterprise generating Port actions automatically for all Promises, you also stay connected to the most up-to-date platform offerings.

Key features

Query and analysis tools: Ask questions about your SDLC, ownership, policies, and standards directly from your IDE, Slack, or the interface of choice.

Execute actions: Perform any self-service action you have access to in Port. Build new ones, invoke them, and audit their output.

Call AI agents: Run any AI agent or agentic workflow you’ve built inside Port, and evaluate runtime, output, and quality from your IDE.

Create, update, and delete Port structures: Blueprints, scorecards, and entities can all be managed via Port’s MCP server, making it possible to safely vibe-build Port in natural language.

Centrally manage prompts: Port is one of the few MCP servers to leverage the Prompt spec to provide a unified location for defining, storing, and managing reusable prompts. Govern and streamline your best reusable prompts, and easily expose them to platform users.

Example scenario

You want to plan your workday. You start in Cursor and ask, “What’s on my plate?” Port returns a list of immediate action items ordered by priority, for example the project “Implement OAuth2 authentication flow,” a high-priority task you were in the middle of working on the previous day.

After that, it will list urgent reviews, critical incidents, and other potential tasks or action items you can dive into once work on the OAuth project is complete.

Watch this example and more here.

Status: Actively maintained by Port, currently in open beta.

4. Prometheus MCP server

The Prometheus MCP server provides your agent with a safe and structured way to ask, “Are we healthy?” and receive accurate answers. It exposes PromQL and metadata through clear, least-privileged tools, so you can query time-series data without relying on brittle scraping or shell access.

You can use it to correlate alerts with metrics, check SLOs on the fly, and give your automation the context it needs to act. You can run a community server for vanilla Prometheus, Cortex, Mimir, or Thanos, or use the AWS-Labs variant for Amazon Managed Prometheus. Either way, you keep control of scopes, tokens, and audit.

Key features

PromQL and range queries: You define queries with JSON Schema inputs, including time windows, step sizes, and label filters, so your queries stay structured and precise.

Helpers for everyday operations tasks: Common checks, such as error rates, latency, burn rates, and SLO budgets, are built in, saving you from having to write the same queries repeatedly.

Scoped visibility: You can maintain security with read-only modes or restrict access to a specific tenant or data source, ensuring the agent never sees more than it should.

Rule and alert visibility: It’s easy to view alerting rules and current alert states, providing a direct link between symptoms and signals.

Result shaping: Outputs can be formatted for whatever comes next, whether that’s tabular summaries, percentile breakdowns, or links back to dashboards.

Operational safety: Safeguards such as rate limits, time-bounded queries, and full audit logs of who asked what and when ensure the system's reliability and accountability.

Example scenario

Let’s say you suspect a spike in errors. You could ask: “Show 5xx rate for the checkout service over the last 15 minutes and compare it to the previous 15,” and follow with: “Is our p95 latency breaching the payments SLO right now?”

The client routes the requests to the Prometheus server, which runs safe, scoped queries and returns structured results you can act on.
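The first question in this scenario could translate into something like the Python sketch below. It only builds the PromQL expression and the parameters for Prometheus's `/api/v1/query_range` HTTP endpoint (`query`, `start`, `end`, `step`); it makes no network call. The metric name `http_requests_total` and the `service` and `status` labels are assumptions about how your services are instrumented, and the helper names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def error_ratio_query(service: str, window: str = "5m", offset: str = "") -> str:
    """PromQL for the share of 5xx responses. Metric and label names are assumptions."""
    suffix = f" offset {offset}" if offset else ""
    errors = f'sum(rate(http_requests_total{{service="{service}",status=~"5.."}}[{window}]{suffix}))'
    total = f'sum(rate(http_requests_total{{service="{service}"}}[{window}]{suffix}))'
    return f"{errors} / {total}"


def range_params(query: str, end: datetime, minutes: int = 15, step_seconds: int = 30) -> dict:
    """Parameters for a time-bounded range query ending at `end`."""
    start = end - timedelta(minutes=minutes)
    return {"query": query, "start": start.timestamp(),
            "end": end.timestamp(), "step": f"{step_seconds}s"}


now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)  # fixed time for a repeatable example
query = error_ratio_query("checkout")
current = range_params(query, end=now)                           # last 15 minutes
previous = range_params(query, end=now - timedelta(minutes=15))  # the 15 minutes before that
print(query)
print(current["start"], previous["start"])
```

Bounding the window and the step on the server side is one way to get the time-bounded, rate-limited behaviour described under operational safety.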

Status: AWS Labs docs are available, along with an active community project that was released in August 2025.

5. Grafana MCP server

The Grafana MCP server provides your agent with safe, schema-defined access to dashboards and data sources, without requiring the handover of admin rights. You can search dashboards, list panels, extract the underlying queries, and generate deep links for hand-offs.

When permitted, you can also propose controlled patches for dashboards, ensuring that investigations and evidence remain in one place. You keep your RBAC, folders, and teams exactly as they are; the agent only calls verbs you approve. It ships as binaries and a Docker image, with Helm for cluster installs and helpers for Prometheus and Loki, so setup is quick.

Key features

Find dashboards easily: You can search by name, tags, or folder, and quickly view panels with their details to navigate more efficiently.

See the queries behind charts: Pull the exact PromQL or Loki query a panel is using so you understand where the data comes from.

Share links and snapshots: Create time-scoped links, generate read-only snapshots, and add notes to leave a clear record during incidents.

Make safe edits: Add or tweak panels with built-in validation and a dry-run preview, so you know changes work before applying them.

Control who sees what: Restrict access by folder, namespace, or role. By default, everything is read-only, and you grant extra permissions only when needed.

Keep everything safe and tracked: Rate limits and time windows help prevent misuse, and a full audit log shows who ran queries and made changes, so you stay accountable.

Example scenario

Let’s say you need quick evidence during an incident. You could ask: “Find the ‘Checkout SLOs’ dashboard and give me a link to the p95 latency panel for the last 30 minutes.” A good follow-up could be: “Extract the panel’s query and add an annotation that the canary started at 14:05.”

The Grafana server returns the deep link, the query text, and confirms the annotation under your RBAC.
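Extracting “the query behind the chart” is mostly a walk over the dashboard's JSON model. The Python sketch below assumes the common layout in which each panel has a `targets` list and Prometheus or Loki targets carry their query in `expr`, with collapsed rows nesting further panels. The `panel_queries` helper and the sample dashboard are illustrative, not the server's code.

```python
def panel_queries(dashboard: dict) -> dict:
    """Map panel title to the list of query expressions found in its targets."""
    queries = {}

    def walk(panels):
        for panel in panels:
            if panel.get("type") == "row":
                # Collapsed rows keep their panels nested one level down.
                walk(panel.get("panels", []))
                continue
            exprs = [target["expr"] for target in panel.get("targets", []) if "expr" in target]
            if exprs:
                queries[panel.get("title", "")] = exprs

    walk(dashboard.get("panels", []))
    return queries


# Illustrative dashboard JSON, trimmed to the fields the helper reads.
dashboard = {"title": "Checkout SLOs", "panels": [
    {"type": "row", "title": "Latency", "panels": [
        {"type": "timeseries", "title": "p95 latency", "targets": [
            {"expr": "histogram_quantile(0.95, sum(rate(http_request_duration_seconds_bucket[5m])) by (le))"}]}]},
    {"type": "text", "title": "Notes"},
]}
found = panel_queries(dashboard)
print(found)
```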

Status: Open source from Grafana Labs with frequent tagged releases in 2025.

6. PagerDuty MCP server

The PagerDuty MCP server exposes incidents, services, on-call schedules, and notes as safe, schema-defined tools your agent can use under least-privilege. You decide which actions are available and when writes are allowed, so the model can fetch context by default and only create or update when you explicitly enable it.

You can use it to assemble incident timelines, page the right responder, add notes that link evidence, and close the loop from chat without brittle bot glue. The official repo ships configurations for VS Code and Claude Desktop and defaults to read-only, which keeps the blast radius small while you pilot.

Key features

Built-in tools for incidents: You can quickly look up services, see who’s on call, read incident details, create new incidents, add notes, or update status (when write tools are enabled).

Access is kept safe by default: Tools start in read-only mode, with per-tool scopes and explicit flags to unlock write access only for selected users or environments.

Incident timeline and evidence: Fetch incident notes, details, and related references so your agent can summarise the impact and include useful links.

Safe automation: Create or update incidents in a way that avoids duplicates, with rate limits and timeouts to prevent automation from overwhelming responders.

Full audit trail: Every call includes identifiers that can be cross-linked in tickets, runbooks, or post-mortems for easy tracking.

Simple client setup: Configs are documented for both local and remote use, featuring token-based authentication and examples for common editors and desktop clients.

Example scenario

Let’s say you’re troubleshooting a checkout spike. You could ask: “Who is on call for the payment service right now?” You could then follow with: “Open a high-priority incident for payments with the title ‘Error rate above threshold’, add a note linking the Checkout SLOs dashboard, and share the incident link.”

The PagerDuty server performs the reads, creates the incident if writes are permitted, records the note, and returns the links under your RBAC.
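The duplicate-avoidance idea under “Safe automation” can be sketched with an idempotency key. PagerDuty's Events API uses a `dedup_key` for a similar purpose; the Python below does not call PagerDuty at all. It uses a hypothetical in-memory `IncidentStore` and an assumed key derivation (service plus title) to show why a retried request should return the existing incident rather than page someone twice.

```python
import hashlib


class IncidentStore:
    """In-memory stand-in for an incident backend (illustration only)."""

    def __init__(self):
        self._open = {}

    def open_incident(self, service: str, title: str) -> dict:
        # Assumed key derivation: the same service and title map to the same incident.
        key = hashlib.sha256(f"{service}|{title}".encode()).hexdigest()[:16]
        if key in self._open:
            return {**self._open[key], "created": False}
        incident = {"incident_key": key, "service": service, "title": title}
        self._open[key] = incident
        return {**incident, "created": True}


store = IncidentStore()
first = store.open_incident("payments", "Error rate above threshold")
retry = store.open_incident("payments", "Error rate above threshold")
print(first["created"], retry["created"])
```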

7. GitHub MCP server

The GitHub MCP server provides your agent with first-party, least-privileged access to repositories, pull requests, issues, and checks, all within your existing enterprise policies. You keep branch protection, CODEOWNERS, push protection and SSO; the model only calls allowed tools with validated inputs.

Use it to open PRs, triage issues, apply labels, request reviews and kick off workflows without custom bots. It’s valuable because almost every platform change touches Git, and native controls are safer than ad-hoc webhooks or tokens scattered across scripts. GitHub documents local and remote modes and exposes organisation- and enterprise-level policy toggles so you can align with compliance from day one.

Key features

Tools for repo work: You can handle everyday tasks like creating branches, opening or updating pull requests, adding comments, setting labels, assigning reviewers, and requesting reviews.

Safe reads: Browse files, fetch diffs, search code, and verify required status gates before merging.

Policy-aware writes: Writes respect protections (branch rules, push restrictions, CODEOWNERS, signed commits, and required reviews) so nothing bypasses policy.

Workflow integration: Trigger or re-run CI jobs, view run logs, and surface required checks directly to the agent.

Scoped credentials: Tokens can be limited to a repo or an organisation. They start read-only by default, with explicit elevation needed for write access.

Audit trail included: Every action comes with identifiers and links, making it easy to trace what happened in pull requests, issues, or pipelines.

Example scenario

You want a tidy fix to production docs. You could ask: “Create a branch docs/fix-typo, edit README.md to fix the spelling of ‘observability’, and open a PR against main with the title ‘Fix typo in README’.” Then follow up with: “Request a review from the platform-docs team and add the docs label.”

The GitHub server performs the reads and writes within your branch and review policies, then returns the PR link and review status.

8. Slack MCP server

The Slack MCP server provides your agent with safe, schema-defined access to channels, threads, and messages, without granting broad workspace privileges. The server supports stdio and SSE transports, channel and thread history, DMs and group DMs, message search, and a safe posting tool that’s disabled by default.

You keep scopes tight and enable writes only when ready. The installer runs with npx and guides you through setup in minutes, which is handy for quick pilots or workshops. Plan for your workspace governance, then roll out with read-first defaults.

Key features

History and threads: You can fetch channel history and full threads, including activity messages, with simple time- or count-based pagination.

Search: Find messages across channels, DMs, and users, using filters like date, author, or content to narrow results.

Controlled posting: The add_message tool is disabled by default. You turn it on explicitly and can limit posting to specific channels.

Multiple transports: The server works with clients over stdio or server-sent events, and it also supports optional proxy setups.

Modes: Choose between OAuth for standard authentication or a “stealth” mode (make sure this fits your security policies before using it).

TUI installer: Set up the server interactively with a single command: npx @madisonbullard/slack-mcp-server-installer.

Example scenario

An SRE is managing an incident and needs a quick update for leadership. They ask: “Summarise the last 30 minutes of messages in #incidents-payments and list any action items.” Then follow with: “Post a two-line update to #exec-briefing with the current impact and the PagerDuty incident link.”

The Slack server retrieves the context within the defined scopes, generates the summary, and posts the update if write access is allowed.
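The “posts the update if write access is allowed” step comes down to a guard in front of the write tool. The Python sketch below is a generic illustration, not this server's configuration format: `PostingPolicy`, its fields, and the channel names are assumptions. It shows the two properties described above: posting is off unless explicitly enabled, and it can be limited to named channels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PostingPolicy:
    enabled: bool = False                       # writes are off by default
    allowed_channels: frozenset = frozenset()   # empty set means any channel once enabled

    def check(self, channel: str) -> None:
        """Raise PermissionError unless posting to `channel` is permitted."""
        if not self.enabled:
            raise PermissionError("posting is disabled")
        if self.allowed_channels and channel not in self.allowed_channels:
            raise PermissionError(f"posting to {channel} is not allowed")


def post_message(policy: PostingPolicy, channel: str, text: str) -> dict:
    policy.check(channel)
    # A real server would call the Slack API here; the sketch just echoes the request.
    return {"channel": channel, "text": text, "posted": True}


pilot = PostingPolicy(enabled=True, allowed_channels=frozenset({"#exec-briefing"}))
update = post_message(pilot, "#exec-briefing", "Payments impact contained; incident link attached.")
print(update["posted"])
```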

Status: Community-maintained open source, early-stage with active updates, no GA release yet.

9. Terraform MCP server (HashiCorp)

The Terraform MCP server provides your agent with a secure and up-to-date view of providers, modules, and registry data, allowing you to maintain control. The model only sees schemas and permitted actions, not your cloud credentials.

You can use it to scaffold modules, validate attributes, and propose changes that align with current Terraform capabilities. HashiCorp offers documentation for both local and remote setups, as well as integration with Copilot or Claude. The server is in beta, enabling you to pilot it with guardrails in place.

Key features

Live schemas: Provider and module schemas are always generated fresh, so your IaC matches the current registry truth instead of relying on stale training data.

Safe planning: Tools let you read, validate, and plan using JSON Schema inputs. Dry runs are the default, and every write requires clear approval.

Version awareness: You can see available provider versions, spot deprecations, and pin modules to known-good releases.

Linting and formatting: Helpers keep your HCL consistent, with diffs you can review directly in pull requests.

Policy checks: Hook in Sentinel or OPA to block non-compliant plans before they reach production.

Full audit trail: Every request, plan, and outcome is logged, giving platform and security teams visibility into exactly what changed and when.

Example scenario

Let’s say you want a simple S3 bucket with versioning. You could ask: “Generate Terraform for a private bucket named logs-prod, enable versioning, and show me the plan.”

The client calls the Terraform server to scaffold the module, validate attributes against the live AWS provider schema, and run a dry plan. You review the diff, then say, “Open a PR with this change.” The server returns the PR link and an audit entry you can file.
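For a sense of what the scaffolded output might contain, the Python sketch below emits the configuration in Terraform's JSON syntax (the content of a `.tf.json` file). It assumes AWS provider v4 or later, where versioning and public-access settings are separate resources; the helper name and the resource label are made up for the example, and no plan is run. Treat it as a shape to review, not as the server's actual output.

```python
import json


def s3_bucket_config(name: str) -> dict:
    """Terraform JSON for a private, versioned S3 bucket (assumes AWS provider v4+)."""
    label = name.replace("-", "_")  # hypothetical resource label derived from the bucket name
    bucket_ref = f"${{aws_s3_bucket.{label}.id}}"
    return {
        "resource": {
            "aws_s3_bucket": {label: {"bucket": name}},
            "aws_s3_bucket_versioning": {label: {
                "bucket": bucket_ref,
                "versioning_configuration": {"status": "Enabled"},
            }},
            "aws_s3_bucket_public_access_block": {label: {
                "bucket": bucket_ref,
                "block_public_acls": True,
                "block_public_policy": True,
                "ignore_public_acls": True,
                "restrict_public_buckets": True,
            }},
        }
    }


config = s3_bucket_config("logs-prod")
print(json.dumps(config, indent=2))
```

Validating a document like this against the live provider schema, rather than against whatever the model remembers, is the step that catches renamed or deprecated attributes before the plan runs.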

Status: First-party, open source, currently in beta with multiple community walkthroughs and images published.

10. AWS and Azure MCP servers

Both AWS and Azure now provide first-party MCP servers that expose their clouds’ services in a structured, secure way. For cloud-native engineers, this means AI-driven workflows can query, operate, and optimise workloads across the two most widely used hyperscalers — all without bypassing IAM or enterprise guardrails.

Key features

Broad service coverage:

AWS: Documentation search, general API access, CloudFormation, Cloud Control, CDK, EKS/ECS, cost analysis, Bedrock knowledge bases, and more.

Azure: AI Search, Cosmos DB, AKS, ACR, Storage, SQL, Monitor, Log Analytics, and Key Vault.

Secure, policy-aligned access: Both suites use their native identity systems (AWS IAM and Azure Identity), ensuring every action maps directly to existing enterprise authentication and authorisation policies.

Developer-friendly integration: AWS offers one-click installs and client configs for tools like Cursor, VS Code, and Windsurf. Azure integrates tightly with Copilot in VS Code and ships an official extension for fast setup.

Operational helpers:

AWS: Infra tooling for Terraform, CDK, and CloudFormation, with best-practice prompts to keep configurations consistent.

Azure: Natural-language parameter suggestions, ready-to-run tools, and protocol upgrade guidance.

Open source and actively maintained: Both are first-party, open source, and regularly updated. AWS is generally considered production-ready; Azure is in Public Preview and suited to staged pilots.

Example scenario

A platform team wants to review cost efficiency and reliability across its multi-cloud setup:

Ask the AWS cost server to show current spend for prod, list idle EBS volumes, and draft a cleanup plan.

Ask the Azure server to list AKS clusters, query Log Analytics for 5xx spikes in payments, and rotate a DB_PASSWORD secret in Key Vault.

Together, these results give engineers live insights into spend, workload health, and security posture across both clouds — all within the scope of their assigned IAM or Azure Identity permissions.

Status:

AWS MCP servers: First-party, open source, actively maintained, production-ready.

Azure MCP server: First-party, open source, Public Preview, recommended for staged pilots with read-first scopes.

Emerging “AI in engineering workflows” trends to watch

As the adoption of MCP servers grows, the story is shifting from “can it work?” to “how can we run it well?”

Here are three trends to watch out for as you adopt MCP servers:

Custom MCP servers for internal tools

You are no longer waiting for a vendor plugin. Teams are wrapping in-house systems behind small, well-defined MCP servers, including feature flags, change-management portals, chargeback dashboards, flaky test triagers, and even golden-path scaffolding.

Each server exposes a few verbs with clear schemas. Your agent can request “create canary for checkout at 5 per cent” or “open change window for payments”, and the server turns that into audited, least-privilege actions. Fewer one-off scripts. More reusable capabilities.

Developer workflows will change

Instead of bouncing between Slack, runbooks, and bespoke CLIs, you ask for outcomes and let the client orchestrate across servers. In Kubernetes, this might involve a deployment server planning a canary, an observability server monitoring SLOs, and a Git server opening a PR with the manifest change and rollback plan.

In CI, you can trigger ephemeral environments, seed test data, and collect traces with one request, then promote if guardrails pass. The practical effect is faster onboarding, cleaner hand-offs, and far less glue code. You retain GitOps and RBAC, but gain natural-language workflows that your platform can actually govern.

Standardisation and security will decide who scales

If you want MCP to scale, you need a common language and firm rules. Without them, you run the risk of inconsistent verb usage, which can have surprising side effects.

Start by agreeing on names and JSON Schemas for every action and response, and version those capabilities with the server. That way, clients can understand change and negotiate upgrades.

Only after that should you begin to tighten authentication and authorisation. Options range from short-lived tokens and mutual TLS to per-tool scopes that limit who can perform which actions and where.
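A short-lived, per-tool scope check might look like the Python sketch below. The `Token` shape, the `tool:environment` scope format, and the five-minute lifetime are assumptions chosen for the example; in practice the token would be issued and verified by your identity provider rather than built in process.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Token:
    subject: str
    scopes: frozenset      # entries like "restart_rollout:staging" (assumed format)
    expires_at: float      # Unix timestamp


def issue(subject: str, scopes, ttl_seconds: int = 300, now=None) -> Token:
    """Issue a token that expires after ttl_seconds."""
    issued_at = time.time() if now is None else now
    return Token(subject, frozenset(scopes), issued_at + ttl_seconds)


def authorise(token: Token, tool: str, environment: str, now=None) -> bool:
    """Allow the call only if the token is unexpired and holds the exact scope."""
    current = time.time() if now is None else now
    if current >= token.expires_at:
        return False
    return f"{tool}:{environment}" in token.scopes


token = issue("agent-1", {"fetch_logs:prod", "restart_rollout:staging"}, now=1_000.0)
print(authorise(token, "fetch_logs", "prod", now=1_100.0))       # in scope, not expired
print(authorise(token, "restart_rollout", "prod", now=1_100.0))  # scope not granted for prod
print(authorise(token, "fetch_logs", "prod", now=1_400.0))       # expired
```

Scoping by tool and environment together is what lets you grant an agent write access in staging while keeping it read-only in production.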

Build with context, ship with confidence

MCP offers a straightforward approach to translating intent into action. It standardises how AI interacts with code, clusters, and compliance, eliminating patchy integrations. This is why MCP matters to cloud-native teams.

In this article, we defined MCPs, showed how they fit into real DevOps workflows, reviewed the best servers for 2025, and outlined trends to look out for.

As we saw in the earlier-mentioned webinar on “No more YAML? Natural language deployments with Kratix & Claude”, companies like Syntasso are showing what this future could look like, a future where complex engineering operations are simplified into natural-language requests that still respect governance and security.

So, where do you begin?

Start small. Prove value on narrow use cases, then expand only when the path is smooth. The ecosystem is moving quickly, so treat today’s wins as a foundation, not the finish line. Keep an eye on portability, versioning, and security reviews as you scale your operations.
