Complete Guide to ClusterRoles and ClusterRoleBindings

- Okesanya Odunayo Samuel

- Aug 14, 2025

- 13 min read

Updated: Dec 9, 2025

Managing permissions across Kubernetes clusters shouldn't feel like defusing a bomb. Yet, platform teams routinely struggle with Role-Based Access Control (RBAC), especially as they move from managing simple, single-namespace applications to building shared, multi-tenant internal platforms.

In Kubernetes, RBAC policies use roles and bindings to define who can access which resources, and where. But getting these permissions right is only part of the challenge; managing them across namespaces and clusters, for different teams and services, adds another layer of complexity.

This is where ClusterRoles and ClusterRoleBindings come in. Unlike their namespace-scoped counterparts (Role and RoleBinding), these resources grant cluster-wide permissions, making them essential tools for platform engineers working at scale.

However, they also come with risks. Misconfiguring them can unintentionally expose sensitive resources to the wrong users. On the other hand, being too restrictive can break key services and hinder developer workflows.

A Red Hat survey of 600 DevOps and security professionals found that 40% of organisations experienced Kubernetes misconfigurations in the past year. Over a quarter of respondents flagged access control issues, including RBAC, as high-risk security threats. In this context, understanding how to use ClusterRoles and ClusterRoleBindings safely and effectively is critical.

This guide walks you through practical usage patterns, complete with hands-on examples you can test in your own clusters. You'll learn when cluster-wide permissions are necessary, how to scope access without breaking platform workflows, and how to avoid common pitfalls while maintaining security.

Prerequisites

To follow along with this guide, you should have:

Access to a Kubernetes cluster, such as minikube or kind, for creating and modifying RBAC resources.

kubectl, configured and connected to your cluster.

Admin access to your cluster (if you created the cluster yourself, you likely already have this).

Basic Kubernetes knowledge, including familiarity with Pods, namespaces, and deployments.

ClusterRole vs Role: understanding the boundaries

Roles and ClusterRoles differ in scope: a Role is namespaced, while a ClusterRole is cluster-scoped.

The diagram below shows how Roles only function within individual namespaces, while ClusterRoles can grant access to resources across the whole cluster.

Roles

Roles operate within a single namespace. If you create a Role in the development namespace, it can only grant permissions on resources in that namespace. This keeps teams working with their own resources and prevents them from changing things that belong to other teams.

Here's a Role that manages Pods in the development namespace:
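A minimal sketch of that Role follows. The name pod-manager is hypothetical. The namespace and the Pod-only scope come from the text.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-manager          # hypothetical name
  namespace: development     # a Role always belongs to exactly one namespace
rules:
  - apiGroups: [""]          # "" is the core API group, where Pods live
    resources: ["pods"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
```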

The Role above allows complete management of Pods, but only within the development namespace.

Applying the manifests

You can apply these Kubernetes manifests in two ways:

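Method 1: Save the manifest to a file and run `kubectl apply -f <filename>.yaml`.

Method 2: Apply it directly from the terminal by piping it to `kubectl apply -f -`, for example with a shell heredoc.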

Expected output:

The following are the most common Role patterns for namespace-specific access:

Developer Role for application teams

Development teams need to ship features fast without waiting for operations tickets. They need to create Pods, update deployments, manage their application secrets, and troubleshoot issues within their namespace boundaries.

The following is an example of a developer Role that provides the essential permissions teams need for day-to-day application management.
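Here is a sketch of such a Role. The name developer, the namespace development, and the exact resource list are all assumptions. Trim or extend the list to match what your teams actually deploy.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: developer            # hypothetical name
  namespace: development     # assumed team namespace
rules:
  # Core resources, including Secrets (see the discussion of secret access below)
  - apiGroups: [""]
    resources: ["pods", "services", "configmaps", "secrets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  # Lets developers run kubectl logs when troubleshooting
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get"]
  # Workload controllers live in the apps API group
  - apiGroups: ["apps"]
    resources: ["deployments", "replicasets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
```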

The Role above gives developers complete control over application resources within their namespace, which suits a dev or staging environment.

The inclusion of secret access is intentional, as developers need to create and manage secrets for databases, API keys, and TLS certificates. Without this access, they would have to request secrets from operations teams for each deployment.

However, depending on your operational culture or environment (a production namespace, for example), you may want to remove secrets from the resources list.

Read-only access for stakeholders

Product managers, business stakeholders, and audit teams require visibility into what is running in production, but shouldn't be able to modify anything. They need to see deployment status, check configurations, and monitor system health for their reports.

Here's a viewer Role that provides read-only access to production resources:
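A sketch of that viewer Role follows. It assumes the hypothetical name viewer and a namespace called production.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: viewer               # hypothetical name
  namespace: production      # assumed namespace name
rules:
  # Core resources, deliberately without "secrets"
  - apiGroups: [""]
    resources: ["pods", "services", "configmaps", "events"]
    verbs: ["get", "list", "watch"]
  # Deployment status for stakeholders' reports
  - apiGroups: ["apps"]
    resources: ["deployments", "replicasets"]
    verbs: ["get", "list", "watch"]
```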

Notice that the Role above intentionally excludes secret access because stakeholders don't need to view sensitive configuration data.

The read-only verbs (get, list, and watch) let product managers and other stakeholders check deployment status without the risk of accidental changes. Watch in particular streams updates in real time, which is what monitoring dashboards rely on.

Service account manager for team autonomy

Teams deploying applications need custom service accounts with the right permissions. Creating operations tickets for every service account request can be tedious, so you can give teams the ability to create their own within their respective namespaces.

The Role below allows teams to create and manage service accounts:
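A minimal sketch follows. It assumes a hypothetical Role named service-account-manager in a team namespace called development.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: service-account-manager   # hypothetical name
  namespace: development          # assumed team namespace
rules:
  - apiGroups: [""]
    resources: ["serviceaccounts"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  # RBAC escalation checks still apply: a user can only create a RoleBinding
  # to a role whose permissions they already hold (unless they also have the
  # "bind" verb on that role).
  - apiGroups: ["rbac.authorization.k8s.io"]
    resources: ["rolebindings"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
```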

The RBAC Role above enables teams to manage their own service accounts and RoleBindings within their namespace, which is crucial for teams deploying applications with specific permission requirements.

The rolebindings permission enables them to create new RoleBindings that grant their service accounts access to namespace resources. They cannot escalate beyond their namespace, and Kubernetes' built-in escalation checks only let them bind roles whose permissions they already hold.

ClusterRoles

ClusterRoles work differently from Roles. They provide access to cluster-wide resources, including nodes and persistent volumes, and enable setting the same permissions across all namespaces. This makes them necessary for managing platform infrastructure that spans your whole cluster.

ClusterRoles aren't just "bigger" Roles. They serve different purposes entirely. You use Roles when you want to keep permissions within the team or application limits, while ClusterRoles are used when you need the same permissions across your entire platform infrastructure.

The following is a ClusterRole for reading Pods across all namespaces:
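A minimal sketch follows, with pod-reader as a hypothetical name. The metadata has no namespace field, because ClusterRoles are cluster-scoped.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: pod-reader           # hypothetical name; no namespace field
rules:
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch"]
```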

Once bound with a ClusterRoleBinding, the ClusterRole above grants read-only access to Pods across all namespaces within the cluster. Unlike a Role, which is limited to a single namespace, a ClusterRole is not tied to any namespace.

The more interesting bit is that you can also bind a ClusterRole within a single namespace using a RoleBinding. This applies the cluster-defined permissions only inside that namespace. The pattern is essential for platform engineering workflows that require uniform role definitions within a controlled scope.

For example, you might define one platform-developer ClusterRole with standardised permissions, then use RoleBindings to apply it selectively:
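A sketch of such a RoleBinding follows. It assumes the platform-developer ClusterRole already exists and that your authenticator reports a group called developers. The binding name is hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: developers-platform-developer   # hypothetical name
  namespace: development                # the grant applies only here
subjects:
  - kind: Group
    name: developers
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole                     # a RoleBinding may reference a ClusterRole
  name: platform-developer
  apiGroup: rbac.authorization.k8s.io
```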

This RoleBinding gives the developers group the exact same permissions in development that they'd have anywhere else the ClusterRole is bound, but scoped only to that namespace. This way, you maintain consistency without granting cluster-wide access.

ClusterRole patterns for platform teams

Platform teams need a set of standard ClusterRole patterns to manage and secure Kubernetes environments effectively. These patterns are essential for providing team members with the proper access levels to perform their duties efficiently while safeguarding the cluster's security and integrity.

Here are some of the most common ClusterRole patterns you will probably use in a production environment.

Read-only cluster observer

You can't monitor what you can't see. Your Prometheus deployment needs to scrape metrics from Pods across all namespaces. Your logging system needs to correlate events between different teams' applications. Without cluster-wide visibility, you'll have dangerous blind spots in your observability stack.

As such, platform teams often need monitoring and observability tooling with read access across namespaces:
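The sketch below uses the name platform-observer, which the bindings later in this guide refer to. The exact resource list is an assumption, so tailor it to what your monitoring stack actually scrapes.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: platform-observer
rules:
  # Core objects; Secrets are deliberately absent
  - apiGroups: [""]
    resources: ["pods", "services", "endpoints", "namespaces"]
    verbs: ["get", "list", "watch"]
  - apiGroups: ["apps"]
    resources: ["deployments", "replicasets", "statefulsets", "daemonsets"]
    verbs: ["get", "list", "watch"]
  # Ingresses live in the networking.k8s.io API group
  - apiGroups: ["networking.k8s.io"]
    resources: ["ingresses"]
    verbs: ["get", "list", "watch"]
```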

The ClusterRole defined above allows read-only access to key Kubernetes resources like Pods, Services, Deployments, and Ingresses across all namespaces. It uses verbs like get, list, and watch to enable monitoring tools to observe the state and changes of these resources.

One thing to notice is that Secrets are not included in this list. This is intentional. Granting get, list, or watch access to Secrets would allow tools to read sensitive information like passwords, API keys, or TLS certificates, which is a security risk.

Most monitoring systems don’t need the contents of Secrets at all. RBAC has no metadata-only option for Secrets: any principal allowed to list or watch them receives the full secret data. Keep Secrets out of broad observer roles, and grant them separately, by name, only to tools that truly need them.

Note: The watch verb enables monitoring tools to track real-time changes in resources, which keeps live dashboards and alert systems updated.

Pod management across namespaces

Imagine it’s 3 AM, and your monitoring system starts firing alerts. Pods are crashing, and the issue spans multiple teams’ namespaces. As a platform operator, you don’t have time to chase down individual access permissions or wait for team leads to grant temporary access. You need immediate access to Pods across the entire cluster so you can diagnose and resolve the issue before it escalates.

Platform operators often need to manage Pods cluster-wide for debugging and maintenance. Below is an example of how you’d do this:
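A sketch under stated assumptions follows. The name platform-pod-operator is hypothetical, and the verb choices reflect the subresources discussed next.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: platform-pod-operator   # hypothetical name
rules:
  # Inspect Pods everywhere and delete stuck ones
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "list", "watch", "delete"]
  # kubectl logs
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get"]
  # kubectl exec and kubectl port-forward; granting both create and get
  # covers SPDY-based (create) and WebSocket-based (get) clients
  - apiGroups: [""]
    resources: ["pods/exec", "pods/portforward"]
    verbs: ["get", "create"]
  # Events, often the only clue to why a Pod failed
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["get", "list", "watch"]
```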

The subresources pods/log, pods/exec, and pods/portforward make this ClusterRole very useful for operational tasks.

Without pods/log, you can't run kubectl logs.

Without pods/exec, you can't troubleshoot issues inside containers.

Without pods/portforward, you can't forward local ports to Pods for debugging.

Access to events is just as important. When a Pod fails, the events often provide the only clues about what went wrong. Platform teams need this cluster-wide visibility to debug issues across all namespaces efficiently.

Node administration ClusterRole

Infrastructure teams are responsible for keeping the core of the Kubernetes cluster running smoothly.

When traffic increases, they add new nodes to handle the higher workload. Storage issues, such as persistent volumes that fail to mount, require troubleshooting and fixes. They handle cluster upgrades and patches while keeping the system stable to prevent outages.

Because these tasks involve resources that exist at the cluster level (like nodes, persistentvolumes, and storageclasses), infrastructure teams need ClusterRoles that grant access to these cluster-scoped resources.
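A sketch of such a role follows, using the hypothetical name infrastructure-admin. It grants full control over nodes, persistent volumes, and storage classes, plus read-only access to RBAC objects.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: infrastructure-admin   # hypothetical name
rules:
  # Cluster-scoped infrastructure: full control
  - apiGroups: [""]
    resources: ["nodes", "persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  # RBAC objects: read-only, so permissions can be inspected but not changed
  - apiGroups: ["rbac.authorization.k8s.io"]
    resources: ["clusterroles", "clusterrolebindings", "roles", "rolebindings"]
    verbs: ["get", "list", "watch"]
```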

Notice we're granting read-only access to RBAC resources. This allows infrastructure admins to understand the permission landscape. They can see what ClusterRoles exist and who they're bound to, without accidentally modifying permissions. This separation is intentional because infrastructure and security management often require different approval processes and oversight.

The full permissions on nodes and storage resources reflect the infrastructure team's responsibility for cluster capacity and storage provisioning, while the restricted RBAC access maintains proper security boundaries.

After applying these ClusterRoles, verify that they have been created with `kubectl get clusterroles`.

Check the details of a specific ClusterRole by running `kubectl describe clusterrole <name>`.

You should see an output similar to this:

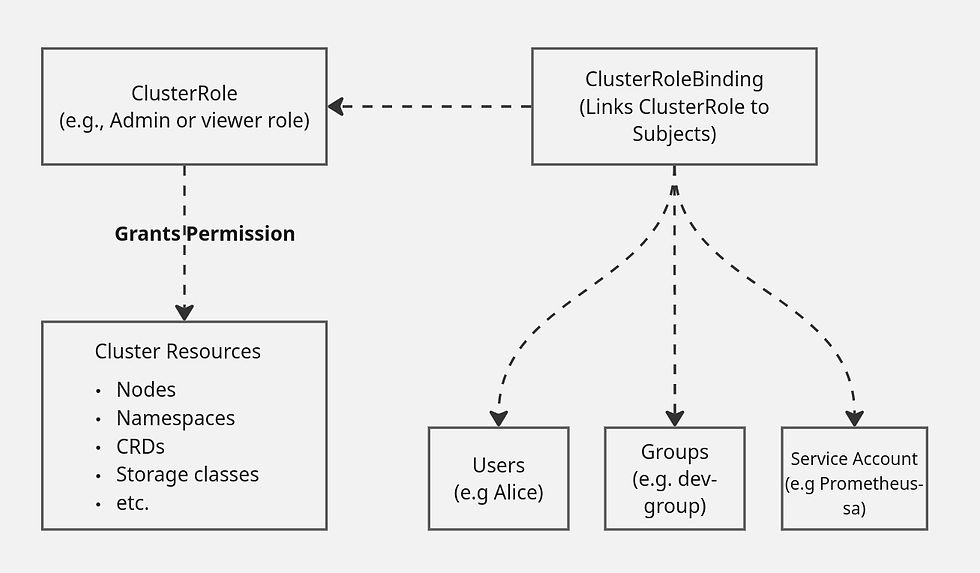

ClusterRoleBinding: connecting permissions to subjects

ClusterRoleBindings connect your carefully crafted ClusterRoles to actual users, groups, and service accounts. Get this wrong, and you'll either lock everyone out or accidentally give someone the keys to your entire cluster.

Think of it this way: ClusterRoles define what can be done (the permissions), while ClusterRoleBindings determine who can do it.

The diagram below shows this relationship: a ClusterRole grants permissions to cluster resources, and a ClusterRoleBinding connects that role to specific users, groups, or service accounts.

ClusterRoleBindings pose significant security risks because they operate across your entire platform. Bind a service account to a powerful ClusterRole, and that account gains access to resources in every namespace. Getting this wrong can expose your whole cluster, so you need to be extra cautious with these bindings.

Common ClusterRoleBinding patterns include:

Binding to individual users

Sometimes you need to grant cluster-wide access to specific people right away: for instance, a platform engineer dealing with an emergency outage, or an architect debugging problems that affect multiple environments. Individual user bindings are the right approach when you need controlled access for particular users.

The following ClusterRoleBinding grants platform admin access to specific users:
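A sketch follows. It assumes your authenticator reports the users as alice and bob, and that a ClusterRole named platform-admin (hypothetical) already exists.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: platform-admins        # hypothetical name
subjects:
  # User names must match what your authenticator reports
  - kind: User
    name: alice
    apiGroup: rbac.authorization.k8s.io
  - kind: User
    name: bob
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: platform-admin         # hypothetical ClusterRole
  apiGroup: rbac.authorization.k8s.io
```

A binding's roleRef cannot be changed after creation. To move these users to a different ClusterRole, delete the binding and recreate it.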

The ClusterRoleBinding above gives both Alice and Bob full administrative access to the platform infrastructure across all namespaces.

Note: Use individual user bindings sparingly as they're hard to audit and manage at scale. This pattern works for a small number of senior platform administrators, but becomes unwieldy as teams grow.

Binding to groups for team access

Most platform teams prefer managing access via groups instead of individual users. For example:
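A sketch follows. It assumes your identity provider asserts groups named sre-team and platform-engineering (hypothetical names), and that the platform-observer ClusterRole exists.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: platform-observers     # hypothetical name
subjects:
  # Group names come from your identity provider
  - kind: Group
    name: sre-team
    apiGroup: rbac.authorization.k8s.io
  - kind: Group
    name: platform-engineering
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: platform-observer
  apiGroup: rbac.authorization.k8s.io
```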

With the ClusterRoleBinding above, both the SRE team and platform engineering groups have the read-only monitoring permissions defined in the platform-observer ClusterRole across all namespaces.

Group-based access is the preferred method for granting access to human users. When someone joins or leaves the SRE team, you update group membership in your identity provider rather than modifying Kubernetes bindings. This separation of concerns makes access management more scalable and auditable.

Service account bindings for automation

Let’s say your platform runs dozens of automated tools that require seamless operation without human intervention.

For example, Prometheus scrapes metrics from every namespace. ArgoCD deploys applications across environments. Your backup system reads persistent volumes across the entire cluster. These tools can't ask for permission every time they need access.

Because these automation tools run as Kubernetes service accounts, you must bind them to ClusterRoles that grant the necessary permissions across the entire cluster:
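A sketch follows. It assumes both service accounts live in a namespace called monitoring, which is an assumption: use whichever namespace you installed them in. The binding name is hypothetical.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: monitoring-observers   # hypothetical name
subjects:
  # ServiceAccount subjects need a namespace and belong to the core API
  # group, so apiGroup is omitted
  - kind: ServiceAccount
    name: prometheus-operator
    namespace: monitoring      # assumed namespace
  - kind: ServiceAccount
    name: grafana-agent
    namespace: monitoring      # assumed namespace
roleRef:
  kind: ClusterRole
  name: platform-observer
  apiGroup: rbac.authorization.k8s.io
```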

With the ClusterRoleBinding above, both the Prometheus operator and Grafana agent service accounts have read access, in every namespace, to the resources listed in the platform-observer ClusterRole.

Service account bindings are often where security risks can hide. Unlike human users, service accounts can't decide what access is appropriate. When you bind the platform-observer ClusterRole to the prometheus-operator service account, it can read resources in all namespaces, including potentially sensitive data in namespaces it shouldn't access.

When you bind a service account to a ClusterRole, it receives every permission in that role. Grant only the permissions it truly needs to perform its function, and avoid adding extras for convenience.

Multi-tenant platform demo

To understand how ClusterRoles and ClusterRoleBindings work in practice, let’s walk through a real-world scenario that mirrors what many platform engineering teams implement: a multi-tenant internal platform.

In a multi-tenant setup, different development teams work in isolated namespaces, while shared infrastructure tools and services need access across the entire cluster. Managing RBAC in such environments requires a balance between isolation and shared access, and ClusterRoles play a key role in that balance.

This demo shows how a single ClusterRole can work in different ways depending on how you bind it. You get per-team isolation and global visibility for shared tools using the same role.

The scenario:

You have two development teams that need complete control within their own namespaces. Your shared services, such as CI/CD pipelines and monitoring agents, require similar access, as they operate across all namespaces.

The diagram below shows how you can reuse the same ClusterRole with different types of bindings — RoleBinding for namespace-scoped access and ClusterRoleBinding for cluster-wide access — to achieve the desired access patterns cleanly and securely.

Step 1: Create the namespace structure

Teams need their own space but also access to shared resources. Creating these boundaries prevents teams from accidentally disrupting each other's work while allowing them to utilise shared services.

Here are the commands:
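As a declarative alternative, the same three namespaces can be written as manifests and applied with `kubectl apply -f`. The names match the ones used in the rest of this demo.

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-alpha
---
apiVersion: v1
kind: Namespace
metadata:
  name: team-beta
---
# Home for configuration and tooling shared by all teams
apiVersion: v1
kind: Namespace
metadata:
  name: shared-services
```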

You should get a similar output:

Step 2: Define platform-level ClusterRoles

You don't want to create a separate role for every team; that's too many roles to keep track of. Standard ClusterRoles work across namespaces, so teams can reuse the same permissions without creating duplicates all over your cluster.

To do this, apply these ClusterRoles to your cluster:
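A sketch of both ClusterRoles follows. The names come from this guide, but the exact resource lists are assumptions to adjust for your platform.

```yaml
# Full development permissions; bound per namespace with RoleBindings
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: platform-developer
rules:
  - apiGroups: [""]
    resources: ["pods", "services", "configmaps", "secrets", "serviceaccounts"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: [""]
    resources: ["pods/log"]
    verbs: ["get"]
  - apiGroups: ["apps"]
    resources: ["deployments", "replicasets", "statefulsets"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
  - apiGroups: ["batch"]
    resources: ["jobs", "cronjobs"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# Read-only access to shared configuration
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: platform-shared-services-reader
rules:
  - apiGroups: [""]
    resources: ["configmaps", "services"]
    verbs: ["get", "list", "watch"]
```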

The platform-developer ClusterRole gives teams full control over common development resources, while the platform-shared-services-reader provides read-only access to specific shared resources.

Step 3: Create team-specific access

Now we'll connect our ClusterRoles to specific namespaces using RoleBindings. This is the key to making multi-tenancy work: each team receives the same permissions, but only within their designated areas.

To do this, create the RoleBindings for team-alpha:
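A sketch for team-alpha follows. It assumes your identity provider reports a group named team-alpha. The binding names are hypothetical.

```yaml
# team-alpha: full development permissions in its own namespace
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: team-alpha-developers
  namespace: team-alpha
subjects:
  - kind: Group
    name: team-alpha
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: platform-developer
  apiGroup: rbac.authorization.k8s.io
---
# team-alpha: read-only access inside shared-services
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: team-alpha-shared-reader
  namespace: shared-services
subjects:
  - kind: Group
    name: team-alpha
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: platform-shared-services-reader
  apiGroup: rbac.authorization.k8s.io
```

For team-beta, create the same pair with team-beta substituted for team-alpha, in both the subject and the first binding's namespace.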

This YAML manifest creates effective isolation because each RoleBinding only grants permissions within its own namespace.

The team-alpha group gets full development permissions in the team-alpha namespace, but only read access to specific resources in shared-services. They get no permissions at all in the team-beta namespace.

This works because RoleBindings limit ClusterRoles to just that namespace. So even though platform-developer is technically a ClusterRole that could work anywhere, the RoleBinding locks it down to only work in that specific namespace.

You benefit from reusing the same permission set across different places, while avoiding accidental over-access.

Step 4: Set up cross-namespace operations

Platform services often cross namespace boundaries to do their work. Your backup system must access different namespaces, security scanners check resources everywhere, and compliance tools audit across the entire cluster. These services need their own service account with the right level of access.

To create this, apply the cross-namespace configuration:
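A sketch follows. It assumes a hypothetical service account named platform-operator in the shared-services namespace, and a hypothetical Secret named platform-backup-credentials as the only Secret it may read.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: platform-operator          # hypothetical name
  namespace: shared-services       # assumed home namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: platform-cross-namespace-operator
rules:
  # Only the named Secret, in any namespace. resourceNames applies to
  # requests that target an object by name, so only get is granted.
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["platform-backup-credentials"]   # hypothetical Secret name
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["configmaps"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: platform-cross-namespace-operator
subjects:
  - kind: ServiceAccount
    name: platform-operator
    namespace: shared-services
roleRef:
  kind: ClusterRole
  name: platform-cross-namespace-operator
  apiGroup: rbac.authorization.k8s.io
```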

The platform-cross-namespace-operator ClusterRole enables reading specific secrets and managing ConfigMaps across all namespaces, making it ideal for platform tooling that requires limited cluster-wide access.

Step 5: Application-specific service account setup

Applications require service accounts with the appropriate permissions for their specific tasks.

Your web application needs to read secrets and configmaps, but shouldn't delete Pods. Batch jobs may need to spin up temporary Pods, but they should not have access to secrets from other applications.

Here is how to set up the RBAC configuration for these applications:
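A sketch for the web application follows. It assumes the application runs in team-alpha and reads a Secret named web-app-secrets and a ConfigMap named web-app-config. These names, the service account name, and the container image are all hypothetical.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: web-app                    # hypothetical name
  namespace: team-alpha            # assumed namespace
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: web-app-permissions
  namespace: team-alpha
rules:
  # Read only the application's own Secret and ConfigMap, by name
  - apiGroups: [""]
    resources: ["secrets"]
    resourceNames: ["web-app-secrets"]   # hypothetical
    verbs: ["get"]
  - apiGroups: [""]
    resources: ["configmaps"]
    resourceNames: ["web-app-config"]    # hypothetical
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: web-app-permissions
  namespace: team-alpha
subjects:
  - kind: ServiceAccount
    name: web-app
    namespace: team-alpha
roleRef:
  kind: Role
  name: web-app-permissions
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app
  namespace: team-alpha
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      serviceAccountName: web-app  # Pods run with the permissions bound above
      containers:
        - name: web
          image: nginx:1.27        # placeholder image
```

Secrets and ConfigMaps mounted as volumes or environment variables are fetched by the kubelet, not by the Pod's service account. This Role therefore only matters when the application itself calls the Kubernetes API.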

The web-app-permissions Role grants the application read-only access to its specific secrets and ConfigMaps; nothing more. The deployment automatically uses this service account, ensuring the application runs with minimal required permissions.

Here's what you end up with:

Teams remain within their designated areas and do not interfere with each other's work.

Everyone can still read the shared configs they need, but they can't change them.

Your platform service account can perform its cross-namespace tasks without holding broader access than it needs.

Applications receive only the necessary permissions, strictly following the principle of least privilege.

Clear boundaries prevent unauthorised privilege escalation.

Step 6: Verify the multi-tenant setup

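Make sure all your RoleBindings are set up correctly, for example with `kubectl get rolebindings -n team-alpha -o wide` (and the same for team-beta and shared-services).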

Your output should be similar to:

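Verify that the service account has cluster-wide permissions by impersonating it with `kubectl auth can-i --list --as=system:serviceaccount:<namespace>:<service-account-name>`.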

You should get the following output:

Test that the permissions work as expected:
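One way to run this test without impersonation is a SubjectAccessReview, which asks the API server's authorizer directly. Submit it with `kubectl create -f <filename>.yaml -o yaml` and read status.allowed in the response. The user dev-user is hypothetical. Assuming that user holds no other bindings, the answer should be false, because team-alpha has no binding in team-beta.

```yaml
# Ask the API server: may a member of team-alpha create Pods in team-beta?
apiVersion: authorization.k8s.io/v1
kind: SubjectAccessReview
spec:
  user: dev-user               # hypothetical user
  groups: ["team-alpha"]
  resourceAttributes:
    namespace: team-beta
    verb: create
    resource: pods
```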

Troubleshooting and security best practices

When ClusterRole permissions go wrong, the impact is cluster-wide. Below are essential debugging commands and patterns to help you troubleshoot effectively and enforce good security hygiene.

Check what a user can do

Before anything else, confirm what a user is allowed to do. This helps you validate whether RBAC rules are working as expected.

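Running `kubectl auth can-i --list --as=<user>` returns the actions the specified user can perform in the current namespace (or the one given with --namespace), including permissions granted cluster-wide.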

Test specific permissions

To check whether a user has permission to perform a specific action (like creating Pods), run `kubectl auth can-i create pods --as=<user> --namespace=<namespace>`.

If the answer is “no,” the user may be missing a RoleBinding or ClusterRoleBinding.

Inspect ClusterRoles and ClusterRoleBindings

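Sometimes, the problem isn’t with the user; it is with the roles themselves. List the ClusterRoles that exist with `kubectl get clusterroles`, and see how they’re bound with `kubectl get clusterrolebindings -o wide`, which adds columns for the bound users, groups, and service accounts.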

Find who has access to a specific ClusterRole

Need to find all bindings connected to a particular user or service account? Use this JSON query with jq:

This helps you trace indirect access to cluster-scoped permissions, especially useful for auditing or debugging shared automation.

Security best practices

Skip wildcards in ClusterRoles: List specific resources and verbs instead of using asterisks (*).

Lock down sensitive resources: Use resourceNames to restrict rules to specific Secrets or ConfigMaps.

Audit regularly: Review ClusterRoleBindings monthly for permissions that are no longer needed.

Keep platform and application permissions separate: Don’t mix cluster administration with application deployment rights.

The biggest mistake you can make is giving everyone the cluster-admin ClusterRole because it's easier than figuring out specific permissions. This grants unlimited access to everything in your cluster. Instead, create specific ClusterRoles for each function your platform team truly needs.

Getting your platform architecture right

ClusterRoles and ClusterRoleBindings aren’t just Kubernetes primitives. They’re the foundation that makes modern platform engineering actually work.

When building internal platforms that serve multiple teams across different namespaces, RBAC patterns are crucial, as they determine whether the platform is secure and scalable or a security nightmare waiting to happen.

Modern platform teams are building self-service internal platforms where developers can provision databases, CI/CD pipelines, and other resources on demand. Frameworks like Kratix help teams build these platforms on Kubernetes, providing the structure and workflows for delivering secure, reusable services at scale.

When building these platforms, the RBAC patterns we’ve covered today become essential. Your platform services need ClusterRoles with precise permissions. Your automation needs service accounts with carefully scoped access. Without these foundations, your platform becomes either too restrictive to be useful or too permissive to be secure.

Ready to see how to build secure, self-service infrastructure platforms? Book a demo to discover how Kratix helps platform teams deliver scalable internal platforms with proper security controls.
